AI adoption is rising, but trust that organizations can govern AI responsibly is eroding. For corporate leaders, this trust gap is turning AI risk into a reputational and financial challenge – one that compliance alone cannot address. This article outlines the problem and explains how Value-Based Engineering (VBE) helps organizations engineer responsibility from the start.

Responsible AI and the New Reputational Risk

For corporate leaders, reputational risk in responsible technology is now a material business risk with measurable financial impact. An EY survey found that 99% of the organizations it surveyed had experienced financial losses from AI-related risks, and nearly two-thirds had lost more than US$1 million. Among the most common risks were regulatory non-compliance and biased outputs. These are also the risks most likely to become public and erode stakeholder trust, which suggests that weak AI controls are already causing reputational as well as financial damage.

At the same time, a trust gap is widening. According to the Stanford HAI AI Index, global confidence in AI companies’ ability to protect personal data declined from 50% in 2023 to 47% in 2024, alongside a decrease in the belief that AI systems are fair and unbiased. Yet optimism about AI products and services continues to rise, particularly in major markets such as the US, UK, Germany, and France, where positive sentiment has increased by up to 10 percentage points since 2022. The message is clear: stakeholders want and rely on AI, but they increasingly doubt that organizations can deploy and govern it responsibly.

This disconnect makes reputational risk an always-on exposure. As AI systems are embedded into core products and decisions, even minor failures can escalate rapidly – from technical edge cases to viral headlines and regulatory scrutiny – particularly when fairness, privacy, safety, or misinformation are involved.

Compliance remains necessary, but it does not guarantee reputational safety. Organizations can meet every formal requirement and still face backlash when their systems fall short of stakeholder expectations, for example through opaque decisions, biased outcomes, or lawful-but-intrusive data practices.

In practice, reputational risk surfaces through familiar, repeatable patterns: fairness failures, privacy overreach, third-party breakdowns, and last-minute compliance scrambles. These incidents are rarely “unknown unknowns”; they reflect risks that could be recognized early and engineered for, but too often are not.

How Value-Based Engineering (VBE) Helps

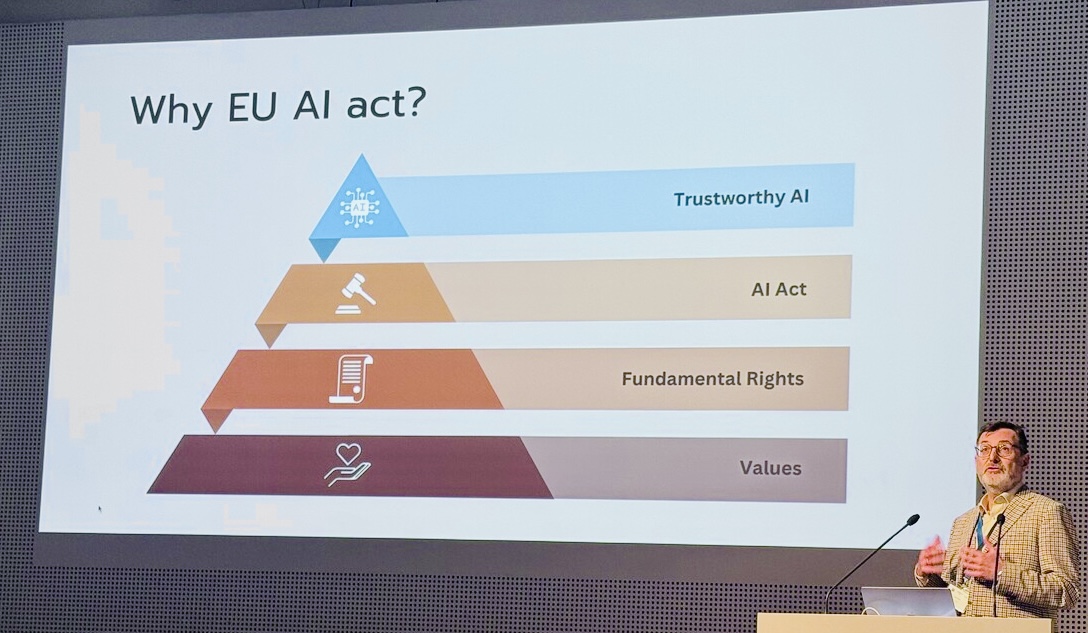

Value-Based Engineering (VBE) addresses reputational risk by closing the gap between what organizations say they value and how technology is actually designed, deployed, and governed. Instead of treating responsibility as a policy layer or a late-stage compliance exercise, VBE integrates stakeholder values, risk considerations, and regulatory expectations directly into engineering and decision-making processes.

At its core, VBE helps organizations identify reputationally sensitive risks early and translate them into concrete requirements. Fairness, privacy, transparency, safety, and sustainability are not left as abstract principles; they are operationalized into design constraints, measurable criteria, and governance checkpoints that teams can act on. This makes known risks visible, actionable, and traceable across a system’s lifecycle, so they can be addressed well before they become public incidents.

VBE also strengthens organizational defensibility. By linking stakeholder expectations to design choices, testing, and ongoing monitoring, VBE gives leaders evidence of why decisions were made and how risks were addressed. When scrutiny arises – from regulators, customers, partners, or the media – organizations are better equipped to respond consistently and credibly rather than reactively.

Ultimately, VBE shifts responsible technology from a reactive posture to a proactive capability. It helps organizations move faster without outrunning their safeguards, reducing the likelihood that predictable risks turn into reputational damage, financial loss, or eroded trust.

Building the Capability for Responsible AI

Responsible technology is no longer judged by intentions alone; it is judged by what organizations can demonstrate under scrutiny. As AI adoption accelerates and trust becomes harder to earn, managing reputational risk requires more than policies or after-the-fact compliance: it requires the ability to recognize, prioritize, and engineer for risk from the start.

The VBE Academy equips leaders and teams with practical training to turn values and regulatory expectations into concrete engineering and governance practices, helping organizations move faster without outrunning trust. Explore VBE Academy training to build the capabilities needed to develop responsible technology with confidence and credibility.