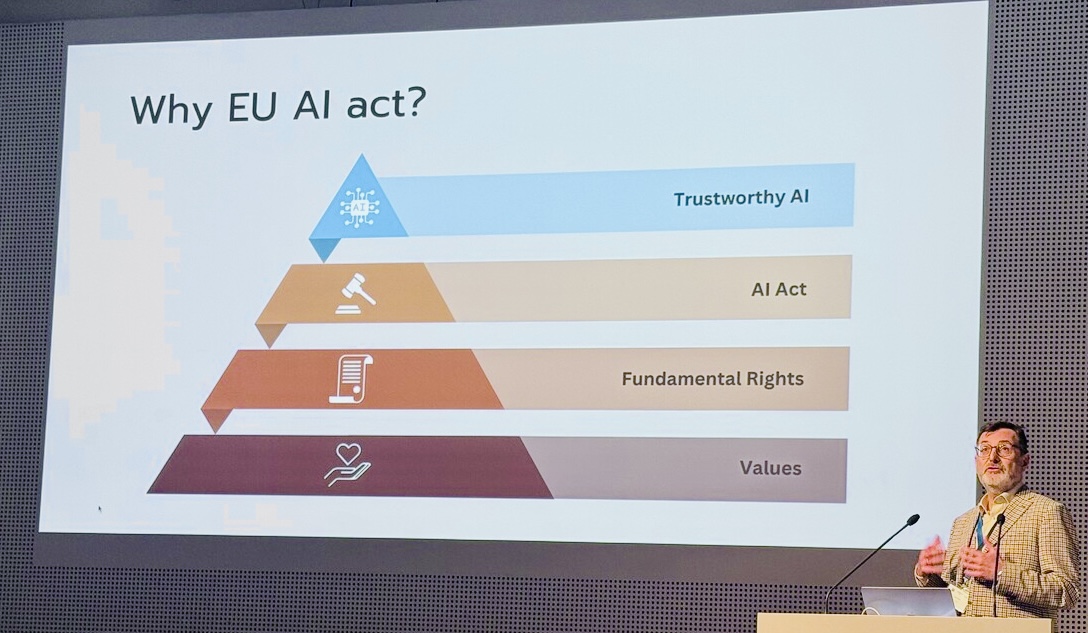

Artificial intelligence is advancing rapidly, and so are concerns about safety, fairness, and fundamental rights. In response, the European Union has introduced the EU AI Act, the world’s first comprehensive legal framework for AI. Its ambition is clear: to ensure that AI systems used in Europe are safe, trustworthy, and aligned with core European values.

But while the Act establishes strong and necessary obligations, many organizations face a practical dilemma: How can abstract principles—like dignity, autonomy, or fairness—be translated into concrete design decisions during system development?

This is where Value-Based Engineering (VBE) comes into play. Grounded in the IEEE 7000 standard, VBE offers a structured and practical methodology for embedding ethical considerations into engineering processes. It provides teams with tools to systematically identify stakeholder values, assess ethical risks, and transform these insights into actionable technical requirements.

Lukas Madl, Soner Bargu, and Mert Cuhadaroglu of Innovethic take up exactly this question in their paper “Bridging Ethics and Regulation: How VBE Facilitates Compliance with the EU AI Act in High-Risk and General Purpose AI.” The paper is published in Springer’s open-access volume “Digital Humanism,” a collection of reviewed contributions that examine how digital technologies intersect with ethics, law, society, and interdisciplinary research. This blog post summarizes the paper’s key insights and explains what they mean for organizations striving to build trustworthy, regulation-aligned AI systems.

What the EU AI Act Requires — and Why It’s Challenging

The AI Act classifies AI systems into four categories: minimal, limited, high, and unacceptable risk. The most stringent obligations apply to high-risk systems, which must meet detailed requirements for risk management, quality management, data governance, human oversight, transparency, and documentation.

However, the paper highlights several real-world challenges in meeting these obligations:

1. Complex Risk Classification

Risk categories, especially those listed in Annex III, do not always reflect how AI behaves in practice. This is particularly true for general-purpose AI (GPAI) models, which can be repurposed for both beneficial and harmful uses. The regulation evaluates systems based on their intended use, but GPAI can be used in ways its developers never anticipated.

2. Lack of Harmonized Standards

Conformity with the Act is meant to rely on harmonized technical standards currently being developed by CEN and CENELEC, but many of these standards are not yet available. This leaves organizations unsure what “compliance” looks like in practice, especially regarding the protection of fundamental rights.

3. Rapid Technological Change

Finally, the pace of AI innovation makes it difficult for regulation to keep up. The EU can update parts of the law through delegated acts, but practical guidance often lags behind technological reality, leaving developers to navigate unclear expectations in a landscape that changes from month to month.

In this context, organizations need structured methods that help them build trustworthy AI even as standards and guidelines evolve.

How VBE Complements the EU AI Act

Value-Based Engineering provides a systematic, lifecycle-oriented framework for identifying, analysing, and integrating human values throughout system design. Its process includes Concept & Context Exploration, Ethical Values Elicitation, and Value-Based Design and Verification. The paper argues that VBE aligns remarkably well with the EU AI Act’s demands and often goes further, offering practical, operational steps where the Act states principles. Three areas of overlap stand out:

1. Risk Management (Article 9)

VBE requires teams to identify ethical risks to stakeholder values, assess likelihood and impact, design mitigations, and verify residual risk—mirroring the Act’s high-risk system obligations.
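The identify, assess, mitigate, and verify loop can be pictured as a simple risk register. The Python sketch below is illustrative only: the class and function names are hypothetical, and the 1-to-5 likelihood and impact scales, the likelihood × impact score, and the acceptance threshold of 6 are assumptions chosen for the example, not values taken from the paper, IEEE 7000, or Article 9.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical acceptance threshold; each organization would set its own.
ACCEPTABLE_SCORE = 6


@dataclass
class ValueRisk:
    """One ethical risk to a stakeholder value (illustrative structure)."""
    value: str               # stakeholder value at stake, e.g. "privacy"
    description: str
    likelihood: int          # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int              # assumed scale: 1 (minor) to 5 (severe)
    mitigations: list[str] = field(default_factory=list)
    residual_likelihood: Optional[int] = None  # set once mitigations are verified
    residual_impact: Optional[int] = None

    def inherent_score(self) -> int:
        return self.likelihood * self.impact

    def residual_score(self) -> int:
        # Until mitigations have been verified, assume the inherent risk remains.
        if self.residual_likelihood is None or self.residual_impact is None:
            return self.inherent_score()
        return self.residual_likelihood * self.residual_impact

    def is_acceptable(self) -> bool:
        return self.residual_score() <= ACCEPTABLE_SCORE


def open_risks(register: list[ValueRisk]) -> list[ValueRisk]:
    """Return risks whose residual score still exceeds the threshold, worst first."""
    pending = [r for r in register if not r.is_acceptable()]
    return sorted(pending, key=lambda r: r.residual_score(), reverse=True)


# Hypothetical register entries for a conversational system.
register = [
    ValueRisk("autonomy", "Chatbot nudges users toward prolonged engagement", 4, 4,
              mitigations=["session limits", "no persuasive dark patterns"],
              residual_likelihood=2, residual_impact=3),
    ValueRisk("privacy", "Conversation logs retained indefinitely", 3, 4),
]

for risk in open_risks(register):
    print(risk.value, risk.residual_score())
# prints: privacy 12
```

The autonomy risk drops to an acceptable residual score once its mitigations are verified, while the unmitigated privacy risk stays open. Keeping residual risk separate from inherent risk is what makes the "verify residual risk" step visible in the record.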

2. Quality Management (Article 17)

VBE fits neatly into existing quality management system (QMS) structures by introducing Ethical Value Requirements (EVRs): traceable, testable requirements derived from stakeholder values. This supports documentation, justification, and oversight duties.

3. Fundamental Rights Impact Assessment (Article 27)

Through stakeholder analysis and value exploration, VBE naturally uncovers risks to dignity, autonomy, privacy, equality, and fairness. These insights can feed directly into the fundamental rights impact assessment (FRIA) that Article 27 requires for high-risk AI systems.

Why VBE Strengthens Ethical and Regulatory Compliance

The paper outlines four ways in which VBE not only supports compliance but also reinforces the spirit of the EU AI Act.

1. Going Beyond Principle Checklists

Static checklists of principles such as “fairness” or “transparency” are too generic and easily misapplied.

VBE instead uses a non-list approach, discovering the values that matter in each unique context. This prevents oversights and the “checkbox ethics” that fail to address real risks.

2. Deep Contextual Understanding

VBE requires teams to unpack values (e.g., dignity, autonomy) through ethical theory and stakeholder experience. The tragic case of a teenager harmed by an emotionally manipulative chatbot illustrates what happens when systems fail to consider psychological safety and user vulnerability. Such risks become visible during a proper values analysis, not after deployment.

3. Structured Value Elicitation and Translation

A major challenge in AI development is translating abstract values into concrete, testable requirements.

VBE does this through Value Qualities, Ethical Value Requirements (EVRs), and traceable design controls.

This provides engineers with a clear, repeatable method for embedding ethics into design decisions.
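The chain from value to design control is essentially a traceability graph. The Python sketch below models it under stated assumptions: the class names mirror the terms used above, but the fields, the example content, and the rule that every EVR needs at least one design control and one verification test are hypothetical illustrations, not structures prescribed by the paper or by IEEE 7000.

```python
from dataclasses import dataclass, field


@dataclass
class EthicalValueRequirement:
    """An EVR: a testable requirement derived from a value quality (illustrative)."""
    req_id: str
    text: str
    design_controls: list[str] = field(default_factory=list)
    verification_tests: list[str] = field(default_factory=list)


@dataclass
class ValueQuality:
    """A concrete facet of a value in this specific context."""
    name: str
    evrs: list[EthicalValueRequirement] = field(default_factory=list)


@dataclass
class Value:
    name: str
    qualities: list[ValueQuality] = field(default_factory=list)


def trace_gaps(values: list[Value]) -> list[str]:
    """List every missing link in the value -> quality -> EVR -> control/test chain."""
    gaps = []
    for value in values:
        if not value.qualities:
            gaps.append(f"{value.name}: no value qualities identified")
        for quality in value.qualities:
            if not quality.evrs:
                gaps.append(f"{value.name}/{quality.name}: no EVR derived")
            for evr in quality.evrs:
                if not evr.design_controls:
                    gaps.append(f"{evr.req_id}: no design control")
                if not evr.verification_tests:
                    gaps.append(f"{evr.req_id}: no verification test")
    return gaps


# Hypothetical example: psychological safety for a conversational system.
safety = Value("psychological safety", [
    ValueQuality("recognises distress signals", [
        EthicalValueRequirement(
            "EVR-1",
            "Escalate to crisis resources when self-harm language is detected",
            design_controls=["classifier gate before response generation"],
            verification_tests=["red-team prompt suite"],
        ),
    ]),
    ValueQuality("avoids emotional dependency", [
        EthicalValueRequirement(
            "EVR-2",
            "Do not claim to be human or to have feelings",
            design_controls=["system prompt constraint"],
        ),
    ]),
])

for gap in trace_gaps([safety]):
    print(gap)
# prints: EVR-2: no verification test
```

Running a gap check like this before each design review is one possible way to keep the chain complete as requirements evolve; the check flags EVR-2 because it has a design control but nothing that verifies it.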

4. Early and Continuous Stakeholder Engagement

Trustworthy AI requires genuine engagement with people affected by the system.

VBE integrates stakeholder consultation from the beginning and continues throughout development—ensuring that real-world concerns shape technical choices. This reduces risk, enhances social acceptance, and improves system quality.

Conclusion

Implementing the EU AI Act is not only a legal challenge—it is an engineering challenge. Organizations need tools that translate legal principles into actionable design processes. Value-Based Engineering provides exactly that.

By offering structured methods for ethical analysis, risk assessment, stakeholder engagement, and traceable requirement development, VBE helps teams build AI systems that are not only compliant but genuinely aligned with human values and societal expectations.

In an era where trust and accountability are central to AI governance, VBE stands out as a practical, future-ready approach for developing safe, ethical, and regulation-aligned AI systems.

The full paper by Lukas Madl, Soner Bargu, and Mert Cuhadaroglu is available in Springer’s open-access volume “Digital Humanism.”